Computer Systems – Assignment 2

The Operating System [P2]

An integral part of any computer, the operating system (OS) is the main piece of software that runs on it. It performs a number of tasks that enable other software to run and keep the computer reliable. Examples of operating systems include Linux (in the form of distributions like Ubuntu, RHEL and Arch), Windows and OS X.

The OS manages the data stored in RAM. When data kept there is no longer required, the operating system frees the memory it occupied. This allows other software to store data there instead and, because the OS reclaims a program's memory when it exits, limits the damage done by a memory leak (when software keeps memory marked as needed although it no longer is, making it unusable until the program exits or, for a leak in the OS itself, until the computer restarts).

File management is also one of the roles of the OS. When the computer starts, the OS typically mounts the storage devices made available to it through a variety of interfaces, and it handles the reading and writing of data to and from these devices while the computer is in use. The OS also typically includes software designed to identify the filesystems (such as ext4, FAT32 and NTFS) on these devices and load the correct drivers to read and write them.
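To illustrate how an OS might recognise a filesystem, the sketch below checks a few well-known signature bytes ("magic numbers") near the start of a partition. It is a simplified Python illustration rather than how any particular OS does it: the function name `identify_filesystem` is hypothetical, and real tools such as Linux's `blkid` check many more formats and fields (telling ext4 apart from ext2/3, for example, needs the superblock's feature flags, which this sketch ignores).

```python
# Simplified filesystem detection by signature bytes.
# Offsets follow the standard on-disc layouts:
#   NTFS:     OEM ID "NTFS    " at byte 3 of the boot sector
#   FAT32:    type string "FAT32   " at byte 82 of the boot sector
#   ext2/3/4: superblock starts at byte 1024; its magic 0xEF53 sits 56 bytes in

NTFS_OEM_OFFSET = 3
FAT32_TYPE_OFFSET = 82
EXT_MAGIC_OFFSET = 1024 + 56


def identify_filesystem(head: bytes) -> str:
    """Guess the filesystem from the first 2 KiB (or more) of a partition."""
    if head[NTFS_OEM_OFFSET:NTFS_OEM_OFFSET + 8] == b"NTFS    ":
        return "NTFS"
    if head[FAT32_TYPE_OFFSET:FAT32_TYPE_OFFSET + 8] == b"FAT32   ":
        return "FAT32"
    # The ext magic number is stored little-endian, so 0xEF53 appears as 53 EF.
    if head[EXT_MAGIC_OFFSET:EXT_MAGIC_OFFSET + 2] == b"\x53\xef":
        return "ext2/3/4"
    return "unknown"


if __name__ == "__main__":
    # A fake 2 KiB header containing only an NTFS signature.
    fake = bytearray(2048)
    fake[NTFS_OEM_OFFSET:NTFS_OEM_OFFSET + 8] = b"NTFS    "
    print(identify_filesystem(bytes(fake)))  # NTFS
```

Once the filesystem is known, the OS can pick the matching driver; a result of "unknown" is where a real system would fall back to asking the user or leaving the device unmounted.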

Peripheral input and the computer's output are largely managed by the operating system too. Input from the keyboard, mouse and other peripherals is usually handled by the operating system before any other software, allowing it to abstract the data arriving from these devices into an API that is easier for developers to use. The OS is also responsible for loading the correct drivers for any devices that have been connected to the computer, which makes it responsible for ensuring the user can see visual output from the computer on the monitor.

An example of a desktop environment in Linux, called LXDE, which runs on the X11 display server.

The interface that the user interacts with is, in modern times, almost always graphical, but alternatives such as the command line do exist. It is the operating system's duty to start the interface when a user logs in, and in the case of Windows and OS X the interface is considered part of the operating system itself.

A typical graphical user interface (GUI) includes a taskbar or panel at the bottom, top, left or right of the screen; desktop icons to launch applications and open files; a menu giving access to more applications; and windows for the programs that are running. LXDE, shown to the right, follows this convention while aiming to consume minimal amounts of the computer's resources.

A CLI, or command-line interface, does not use images, windows or a mouse cursor. The keyboard is typically the only form of input, aside from a network connection. The entire screen is divided into a grid of small rectangles (cells), each able to contain a single character. Each cell can also hold two colour values: one for the text and the other for the background. The computer is used by typing commands on the keyboard and receiving information back in text form. To a new user this method of interfacing with the computer can seem daunting, but an experienced user will often feel more at home on a command line than in a GUI. CLIs were the norm before the introduction of GUIs, and some people still prefer to use a real CLI. More commonly, people who enjoy the benefits of a command-line interface use one by means of a terminal emulator running inside a graphical environment.
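The grid of character cells described above can be modelled in a few lines. This is a hypothetical Python sketch, assuming the classic text-mode layout of 80 columns by 25 rows and a 16-colour palette in which index 7 is light grey and 0 is black; real terminals and terminal emulators store more attributes per cell (bold, underline and so on).

```python
from dataclasses import dataclass


@dataclass
class Cell:
    char: str = " "
    fg: int = 7  # text colour index (7 = light grey in the classic 16-colour palette)
    bg: int = 0  # background colour index (0 = black)


class TextScreen:
    """A grid of character cells, like a classic text-mode display."""

    def __init__(self, cols: int = 80, rows: int = 25):
        self.cols, self.rows = cols, rows
        # Each row is a separate list of fresh Cell objects.
        self.cells = [[Cell() for _ in range(cols)] for _ in range(rows)]

    def write(self, row: int, col: int, text: str, fg: int = 7, bg: int = 0) -> None:
        """Write text starting at (row, col), clipping at the right-hand edge."""
        for offset, ch in enumerate(text):
            if col + offset >= self.cols:
                break
            self.cells[row][col + offset] = Cell(ch, fg, bg)

    def row_text(self, row: int) -> str:
        """Return the characters of one row, ignoring colours."""
        return "".join(cell.char for cell in self.cells[row])


if __name__ == "__main__":
    screen = TextScreen()
    screen.write(0, 0, "$ ls -l", fg=2)  # green prompt and command text
    print(screen.row_text(0).rstrip())   # $ ls -l
```

Because every cell has a fixed size, the display hardware or terminal emulator only needs the character and two colour indices to draw each position, which is part of why CLIs are so light on resources.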

Windows and Linux: A Comparison [M1]

Overview

The logo that has been used for Windows since February 2012. This was accompanied by an updated, coloured version to represent Microsoft the following August.

Windows is the most popular personal computer operating system, and is available in some form on a number of platforms, including desktop, laptop, tablet and mobile. Although the operating system is suitable for most applications and is generally well developed, many consider some of its core designs to be flawed, and given the scale of Windows these cannot realistically be changed. Windows was first released by Microsoft in 1985, its development having been driven primarily by Bill Gates. The latest version of the operating system is Windows 8.1.

Tux the penguin, the mascot of Linux. This is the most commonly used version, drawn by Larry Ewing, but many others have created their own renditions.

Linux, although it holds only around 2% of the global desktop OS market share, is a versatile, scalable and stable operating system that can be modified with ease, something the community and developers actively encourage. Linux provides the basis of many different desktop, server and mobile distributions (distros), and the 44 most powerful supercomputers in the world run some form of it. Popular operating systems based on Linux include Ubuntu, Arch Linux, RHEL (Red Hat Enterprise Linux, a paid, subscription-based OS), CentOS and Android. Linux was first released in 1991 and was created by Linus Torvalds, partially as an open-source clone of Unix, a proprietary operating system released by Bell Labs in 1973. As of 17 September 2014, the newest version of the Linux kernel is release 3.16.3.

Releases

Windows has been through a number of significant releases, and many of the more recent ones have been updated during their lifespan by way of "service packs". These updates typically include a number of fixes to the operating system, particularly with regard to security, and often entirely new features. Some service packs only require the original operating system to be installed, whereas others require any previous service packs to be installed too. Past releases of Windows include 2000, ME, XP, Vista, 7, 8 and 8.1, and Windows 10 has been announced.

The Unity desktop interface running on Ubuntu 12.04 LTS, showing keyboard shortcuts.

Linux has developed continually since its first release in 1991. Thousands of developers other than Torvalds have contributed to the Linux codebase during this time, and new versions of the kernel are released frequently. Operating system developers often choose the most stable releases of the kernel to build upon, but as newer versions are released the opportunity to update is typically made available. Ubuntu, for example, is released every 6 months, with every other April release being a long-term support (LTS) release. Arch Linux, however, uses a rolling-release model: if all of the software packages on the system are up to date, the OS is at the newest version, and there are no specific versions or releases as there are with Ubuntu or Windows.
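The "every other April" rule can be expressed as a short check on Ubuntu's year.month version numbers (for example 12.04, released in April 2012). This Python sketch is illustrative only: the function names are hypothetical, and it assumes the pattern Ubuntu has followed since 8.04 (the first LTS, 6.06, was released in June 2006 and does not fit the rule).

```python
def is_lts(version: str) -> bool:
    """True if an Ubuntu 'YY.MM' version is an April release in an even year."""
    year_text, month_text = version.split(".")
    year, month = int(year_text), int(month_text)
    return month == 4 and year % 2 == 0


def releases_between(first_year: int, last_year: int) -> list[str]:
    """Every April (.04) and October (.10) release for two-digit years in the range."""
    return [f"{year}.{month:02d}"
            for year in range(first_year, last_year + 1)
            for month in (4, 10)]


if __name__ == "__main__":
    for version in releases_between(12, 14):
        label = "LTS" if is_lts(version) else "standard"
        print(version, label)
```

A rolling-release distro such as Arch has no equivalent function, since there is no version number to test: being up to date is the only "version".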

Mobile

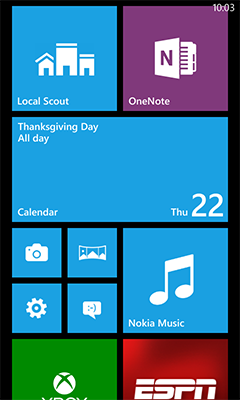

A screenshot of the home page of Windows Phone 8.1, showing information and giving access to apps and settings.

Windows for mobile originally took the form of Microsoft's Pocket PC operating system, which developed into Windows Mobile. With the release of Windows Phone 7, about a year after Windows 7, Microsoft adopted the Metro user interface. This was the first step in an almost complete rebranding of the company. Windows 8 was released alongside Windows Phone 8, and a number of handsets began shipping with the new mobile OS. Windows Phone 8 is based around a tile interface, which users can customise. There are a number of built-in applications, such as a calendar, music player and camera, and a number of other Microsoft apps are available, such as Office OneNote and the Xbox app. The Cortana digital personal assistant was introduced in April 2014 in the UK and US to compete with Android's Google Now and Apple's Siri. A store allows users to download more software from third-party developers that has been approved by Microsoft, but most users find the selection of apps available disappointing. The lack of apps deters users, which in turn deters developers from building apps for the Windows Phone platform, creating an ever-worsening situation.

Linux provides the kernel behind almost all other major mobile operating systems, including Google's Android, Samsung and Intel's Tizen, Mozilla's Firefox OS and Amazon's Fire OS. Apple's iOS instead uses XNU, the kernel of Apple's Darwin operating system, which is derived from the Mach and BSD kernels, not Linux. Linux is suited to mobile systems because a basic Linux system can run with very few computer resources. This means it can be crafted to its purpose by installing packages built by other developers, making an efficient product with minimal developer effort, and this is one of the reasons Linux is used so widely on mobile devices.

Windows Phone 8's primary competitors are Android and iOS, both of which are based on Unix-like kernels. This is almost irrelevant to the user, however, as the UIs provide much the same functionality. Android has been known to suffer from performance issues, which are often attributed to its extensive use of Java. Windows Phone, in contrast, has been known to be unstable and poor in terms of features, which is partially due to the Windows NT kernel at its heart. Android's app store is also a lot more comprehensive and more leniently moderated than the Windows store, which appeals to many Android users.

Source and Licensing

Windows is a proprietary, commercial, closed-source operating system. This means that the technologies used by the developers at Microsoft are kept secret from the user. It also means that in order to use the software legally, one must own a license key, whether bought by the user (a Windows 8.1 license costs £70) or supplied with a new computer. Lastly, the source code, written in C and C++, is not shown to the public. This severely restricts the opportunities to modify the OS, and means that techniques used by the developers of the operating system cannot be shared. It also means that intentional vulnerabilities, called backdoors, could be implemented in the OS without the public knowing.

These attributes contrast strongly with those of Linux. From the very beginning of its development, Linux was intended to be open-source; the source code can be viewed on Torvalds' Linux GitHub repository, for example. The OS is 96% written in C, and the remainder in Assembly language and various other programming languages. Linux is free and so are the vast majority of its distros, although donations are often encouraged. Because of the license that accompanies Linux, the GNU General Public License (version 2), it is legal for anyone to modify Linux and redistribute it, commercially or not, provided that the modified source code is also made available under the same license. Because the source code is freely available, the community can also provide a huge amount of help in identifying and even fixing bugs in the system. Anyone is able to modify the source of Linux and submit their changes as a patch to the kernel's mailing lists, where it can be reviewed, approved by the maintainers and merged.

Desktop Experience and Customisation

The Windows 10 desktop experience, showing the multiple-workspace functionality new to Windows, with the Registry Editor open.

Windows is designed to be more complete as a functional operating system out of the box. For this reason, a number of software packages such as a file explorer, process manager, text editor, taskbar, menu and login manager are all included. Although they add to the size of a fresh Windows installation, they are well integrated into the rest of the operating system and are designed to work alongside each other. This means such software can be built more efficiently and can provide a more cohesive user experience, while also, and arguably more importantly, making large-scale software development for the OS a lot easier. Despite this, the standards used in the development of this software generally do not pass into the wider development community, partially as a result of the closed-source nature of Windows, and this leads to a more inconsistent experience once a user starts to download software. Also, because the software is tightly interconnected, it is a lot more difficult to target specific parts in order to customise, swap or remove them. This principle differs greatly from the ideology behind Arch Linux, for example.

A screenshot of bspwm, a highly configurable tiling window manager often used on Arch Linux by enthusiasts. Unlike with most floating window managers, windows are evenly fitted onto the screen, leaving no space unused.

In Linux, a scale exists between the distros that take a more Windows-like approach to the software they include and the more minimalist options. Closest to where Windows would rank on this scale is perhaps Ubuntu. Frowned upon by much of the Linux community, Ubuntu includes a great number of packages by default, and many view this as bloat that does not adhere to the core principles behind Linux. The inclusion of this software means that Ubuntu Desktop consumes over 5GB on disc when installed. Although Ubuntu fills a lot of disc space, the packages it includes allow a user to complete common tasks without having to install extra packages. Ubuntu also provides an easy-to-use method of obtaining more software, using APT, from a central repository operated by the operating system's developers, Canonical. At the opposite end of the scale one finds minimalist distros such as Arch Linux, which installs in less than 700MB of space. *The Arch Way* even details the principles behind software development for Arch, and is honoured by a number of developers, even in unrelated fields. Arch Linux is designed to be crafted by the user into the OS that best suits their needs: effectively, only the packages they choose to install are installed. The OS is even distributed without a display server, meaning interaction with the computer is limited to a command-line interface until the user installs one (typically X11). Linux distros are almost entirely open-licensed, meaning anyone can download the source code of a particular piece of software, customise it, and then compile it for their system, all without breaking copyright law.

Strengths

The software available for Windows is the main draw for many people. A lot of small but necessary software packages are built only for Windows, such as some high-performance hardware tuning software. This means that the user must either run Windows natively or in a virtual machine, or use a compatibility layer such as Wine on Linux or OS X. Neither solution for Linux/OS X is ideal, meaning Windows is sometimes the only option. Windows is also less likely to break in a way the user cannot fix, and it is easier to install and get working as desired without much experience.

Linux offers far more stability and more efficient operation, but can be difficult to configure. That said, a sysadmin can get used to using Linux in a short amount of time, and a well-configured system can require very little maintenance. For example, Linux could be used on a network of public-use computers: it would be secure from malicious users by design, and could be set up so that little experience would be needed to keep the computers' software up to date. Linux also offers comprehensive driver support out of the box, and offers open-source alternatives to many manufacturer-developed drivers, such as those for video cards. Linux is also far less susceptible to viruses than Windows, making it a good choice for computers that are only used for basic tasks.

Weaknesses

Windows is generally considered less scalable than Linux, making it less suitable for servers or data centres that are required to handle great amounts of traffic. Windows is also prone to errors that a well-configured Linux installation is unlikely to suffer; for example, missing files and invalid Registry entries frequently cause trouble. Windows is also closed-source and copyrighted, making it virtually impossible (not to mention likely illegal) to modify the OS to any significant extent. This also means that intentional vulnerabilities, called backdoors, could be implemented without the community knowing, potentially violating the privacy of users. New versions of Windows are only released every few years, with minor bug fixes and security patches issued in between. This means that big changes to the OS are infrequent, and users are rarely given a voice in the direction that the software takes.

The main drawback of Linux is that many software developers (such as Adobe, Maxon and Autodesk) choose not to support the platform, primarily because the many different distributions handle tasks in different ways. As well as this, Linux systems can seem complicated to some users, but having an open mind and being prepared to learn is enough for most new users.

Removable Storage and Devices

In Windows, when a user connects a new device to the system, the OS will automatically search online for suitable drivers if they are not already included with the system. This is almost always successful, but outdated, incomplete or unofficial versions can sometimes be installed as a result. In the case of removable storage, such as a USB drive or compact disc, AutoPlay often appears too, asking the user what they would like to do with the device and providing a number of options. Windows also mounts filesystems that it finds and can read on removable storage without asking the user, as this is almost always desired.

In some Linux distros, such as Ubuntu and Elementary OS, AutoPlay alternatives do exist, making common tasks a lot easier for inexperienced users. Other distros, such as Arch, may not even show any sign of recognising the device. However, Linux includes a number of open-source drivers by default, supporting almost all devices a user may encounter, meaning devices that have not been used with the system before can be used almost immediately. As well as this, the system usually does not mount any available filesystems until the user asks to browse the contents of the drive, as mounting them automatically could potentially cause damage.

Performance

Windows has been developed over a great amount of time, and its popularity and closed-source nature have led to the OS growing in size and decreasing in efficiency. The minimum requirements for the desktop version of Windows have generally increased from release to release, and the OS is not as optimised as it could be on mobile. Windows is notorious for gradually slowing down after a fresh installation, and this is primarily due to the way in which it stores files on disc. This is explained later in the Software Utilities section.

Linux installations, in contrast, are known for remaining as fast as they ever were on a particular system, even after months or years of heavy usage. Changes to the Linux kernel are also reviewed by trusted maintainers before Torvalds himself merges them, and badly written code can lead to developers being humiliated by Linux's creator.

Hardware Management

Screenshot of the Device Manager in Windows 8, showing different categories of hardware available to the OS.

In Windows, device drivers can be installed by the system, but this is not always reliable with certain pieces of equipment. Once drivers have been installed, Windows offers functionality to update or roll back the driver software, disable the device if the user wishes, and view several pieces of information about the device, which can be useful in troubleshooting problems. The Device Manager is a part of Windows which typically works well and is useful in diagnosing problems.

In Linux, getting hardware to work can be more of a problem with a new installation. Given that the hardware is unlikely to change frequently, this is generally only a problem when putting Linux on a computer for the first time. Open-source graphics drivers, some of them reverse-engineered, exist for the vast majority of AMD, Intel and Nvidia video chips, and these have even been shown to offer superior performance in some cases. Although all three vendors do provide drivers for Linux, only Intel's are open-source and offer high-quality support. Some even discourage the use of the closed-source drivers, and these will never be included with Linux by default because of their source model. Intel is working to change this, and is developing open-source drivers for Linux, particularly aimed at mobile devices. If the manufacturers of dedicated graphics chips were to adopt a similar tactic, Linux would be very likely to gain a number of users looking to game on the platform.

Software Utilities [P3] [D1]

Advanced system administrators and developers often have a kit of applications and utilities that they use as part of their work. Some of these may be installed on an entire network of computers, in order to improve their security or efficiency. Home users can also choose to install from a variety of software packages to improve their computer's performance. Some common categories of such software are listed below.

Registry Cleaner

The Registry in Windows is an integral part of the operating system, essentially functioning as a database of name/value pairs. It stores information about software installed on the system, and most system settings are kept there too. Keeping this kind of data in a single location makes backing it up, which its great importance demands, a lot less laborious.

Despite this, the Registry is still considered by many to be one of the weakest aspects of Windows as a whole. In Linux, by contrast, configuration files are typically stored in one of two places: in the user's home directory, as a hidden file or in a hidden sub-directory; or in /etc, a sub-directory of the root directory / (equivalent in some ways to C:\ in Windows). The configuration of the system is therefore kept in a predictable location while still being separated into multiple files. It remains easy to back up any configuration file you wish and to keep the convenience of everything being in one place, while also gaining the security and stability offered by separate files. A number of faulty entries in the Windows Registry can cause the entire database not to function, whereas when text files are used for configuration, it is incredibly unlikely for a fault in one file to affect another.
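The isolation argument can be demonstrated with a small loader that reads each configuration file separately, so that a syntax error in one file is reported without stopping the others from loading. This is a hypothetical Python sketch: it assumes a simple `key = value` format with `#` comments, whereas real files in /etc use many different formats, and the function names are invented for illustration.

```python
from pathlib import Path


def parse_config(text: str) -> dict[str, str]:
    """Parse 'key = value' lines; '#' starts a comment. Raises ValueError on bad lines."""
    settings = {}
    for number, line in enumerate(text.splitlines(), start=1):
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue
        if "=" not in line:
            raise ValueError(f"line {number}: expected 'key = value'")
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()
    return settings


def load_all(config_dir: Path) -> tuple[dict[str, dict[str, str]], dict[str, str]]:
    """Load every *.conf file independently; a broken file is recorded, not fatal."""
    loaded, errors = {}, {}
    for path in sorted(config_dir.glob("*.conf")):
        try:
            loaded[path.name] = parse_config(path.read_text())
        except ValueError as exc:
            errors[path.name] = str(exc)
    return loaded, errors
```

Pointing `load_all` at a directory of such files returns the settings that parsed successfully alongside the files that did not, so one corrupted file costs only that file's settings rather than the whole configuration.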

As Windows can only be installed on NTFS-formatted partitions, and NTFS is generally considered more prone to fragmentation than filesystems such as ext4, the need for defragmentation applications is greater than with most operating systems. The Registry, as it is frequently written to and expanded in very small increments, is a large contributor to filesystem fragmentation.

Because of the vulnerability of the Registry and its importance to a smooth-running and secure Windows system, a number of applications exist to clean the Registry and fix common errors found in it. This often consists of removing keys/entries that are no longer used and are therefore redundant. An example of this can often be found when software has been incompletely removed from the system. Packages that use their own file formats and file extensions will usually create an entry in the Registry pointing the Windows installation to the program, so that Windows knows which program to launch when the user opens a file with that otherwise unknown extension. When programs are removed, the entry for the filetype(s) used by the piece of software may be left behind, which can have a small performance impact on the computer.
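The kind of check a Registry cleaner performs on leftover file associations can be sketched as follows. To keep it runnable on any system, the sketch does not touch the real Registry (Python's `winreg` module would be needed for that, and only on Windows); instead it assumes the associations have already been read into a dictionary mapping each extension to the executable path it points at. The function names and the example path are hypothetical.

```python
import os
import sys


def find_orphaned_associations(associations: dict[str, str]) -> list[str]:
    """Return the extensions whose associated executable no longer exists on disc."""
    return sorted(ext for ext, exe in associations.items() if not os.path.isfile(exe))


def clean_associations(associations: dict[str, str]) -> dict[str, str]:
    """Return a copy of the associations with the orphaned entries removed."""
    orphaned = set(find_orphaned_associations(associations))
    return {ext: exe for ext, exe in associations.items() if ext not in orphaned}


if __name__ == "__main__":
    example = {
        ".py": sys.executable,                             # exists: the running interpreter
        ".xyzdoc": "/opt/uninstalled-editor/bin/xyzedit",  # hypothetical, long since deleted
    }
    print(find_orphaned_associations(example))
```

A real cleaner would also back up the entries before deleting them, since removing a wrongly flagged association can stop files from opening.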

Cleaners

Many different software developers have created Registry cleaning software for Windows, offering to boost the performance of a system. These are most effective in home environments, particularly when the system is not secured well and a lot of people use the computer. This naturally leads to a more cluttered filesystem and the creation of more redundant Registry entries. Data in the Registry can also be incorrect, or have been placed there by malicious software. In these cases, a Registry cleaner should remove the entries.

Registry cleaning improves the performance of a system for a number of reasons. Although the Registry makes up a very small part of a Windows installation in terms of storage space, deleting redundant entries in the Registry does free disc space, which in turn lowers the chances of filesystem fragmentation. In the event of the user running a defragmenter, a clean Registry will allow the process to complete in a shorter amount of time. Having less information in the Registry will also allow the operating system to access the Registry database more quickly, as it will occupy a shorter section of physical space on the system drive. When using hard disc drives, this means that the read-write head does not need to move as far across the platter, saving time overall. Erroneous file associations, for example, cause the operating system to waste time looking for executables that do not exist and then throw an error when they are not found. This is unnecessary, and cleaning the Registry can prevent CPU and memory resources being used without need.

A software package commonly used to clean the Registry on a regular basis is CCleaner. Offered free for download by its developer, Piriform, the program scans the Registry and often finds fixes that can be made. After only a few months of heavy use, a Windows Registry can contain hundreds of potentially performance-impacting values, which marginally slow the process of adding more values as system configuration is changed and software operates. CCleaner also allows the user to run a standard performance optimiser, which looks for cached files, browser cookies and temporary data that can be safely deleted. Performing this kind of cleaning alongside a malware scan, then cleaning the Registry and defragmenting the Windows partition, can bring an installation close to the level of performance it had when freshly installed.

Virus Protection

Windows, due to its popularity and poor security, is subject to attack from millions of viruses. There are a number of different sub-categories when it comes to viruses, but almost all have malicious intent, and typically damage a Windows installation unless handled by anti-virus software.

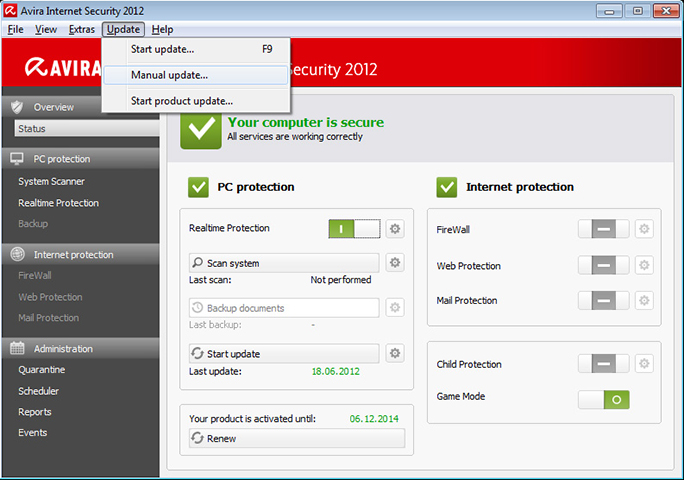

A screenshot of Avira running on Windows, showing the option to manually update the software, as opposed to relying on periodic, automatic updates.

Common anti-virus packages include Avast, AVG, Avira, Comodo, Kaspersky, McAfee and Microsoft Security Essentials (now part of Windows Defender). Some are free, while others require a license fee or subscription in order to use the software. With the release of Windows 8, Microsoft Security Essentials was bundled with the OS, and installing MSE separately on Windows 8 was not supported (as doing so would have no effect). MSE continued to be offered for Windows 7, Vista and XP, although most people with any experience in the field of security would be unlikely to recommend it.

Virus detection occurs in a few different ways, explained below:

Frequent, scheduled scanning is often recommended by experts and the developers of anti-virus software. Scanning involves the program looking in detail at every file it is told to, usually every file it has permission to read. When a file is suspected of being a virus, the software will likely warn the user, quarantine the file (move it to a secure part of the hard drive that can easily be monitored) or, in certain cases, immediately delete it.
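Quarantining, as described above, amounts to moving the suspect file somewhere it cannot easily be run and remembering where it came from. The Python sketch below shows one way to do that; the vault location, the `.quarantined` suffix and the JSON record are all assumptions made for illustration, and many real products go further, for example by encoding the quarantined file's contents.

```python
import json
import os
import shutil
from pathlib import Path


def quarantine(suspect: Path, vault: Path) -> Path:
    """Move a suspect file into a quarantine folder and strip its permissions.

    A small JSON record stores the original path, so the file could be restored
    if it turns out to be a false positive.
    """
    original = os.path.abspath(suspect)
    vault.mkdir(parents=True, exist_ok=True)
    # Note: a second file with the same name would replace the first;
    # a real tool would add a unique suffix.
    target = vault / (suspect.name + ".quarantined")
    shutil.move(str(suspect), str(target))
    # Remove read, write and execute permission for everyone (on Windows,
    # os.chmod can only toggle the read-only flag, so this is weaker there).
    os.chmod(target, 0)
    record = vault / (target.name + ".json")
    record.write_text(json.dumps({"original_path": original}))
    return target
```

Keeping the original path means a false positive can be undone by moving the file back and restoring its permissions, which is why quarantine is usually preferred to immediate deletion.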

Real-time scanning is also used to detect infections almost as soon as they are written to the hard drive. By monitoring the activity on the storage devices available to the computer, anti-virus software is able to identify threats in places such as the downloads folder very soon after they appear. This is effective in targeting infections before they can damage the system.

In order to identify a known virus or determine whether a file is malicious, anti-virus software typically uses a malware database maintained by the developers of the software, a process known as signature-based detection. This database includes information about a vast number of known infections, and allows the software to check each file against the known characteristics (signatures) of malicious files. For this reason, anti-virus software must typically be updated at least weekly, and often more frequently than that. By updating, the software downloads the latest changes to the database, making it as effective as possible in removing the files that it needs to. During the process, the anti-virus software may also upload information about potential threats identified by heuristic or emulation analysis, described below.
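In its simplest form, checking files against a signature database can be illustrated by comparing each file's cryptographic hash with a set of hashes of known malware. This Python sketch makes that simplification explicit: real anti-virus signatures are usually byte patterns or more flexible rules rather than whole-file hashes, because changing a single byte alters a file's hash. The function names are hypothetical.

```python
import hashlib
from pathlib import Path
from typing import Iterable


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so that large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def scan(paths: Iterable[Path], known_bad: set[str]) -> list[Path]:
    """Return the files whose SHA-256 hash appears in the signature database."""
    return [path for path in paths if sha256_of(path) in known_bad]
```

In this model, updating the anti-virus simply means downloading new hashes into `known_bad`. A file that matches nothing is not proven safe, which is the gap heuristic analysis tries to fill.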

Anti-virus packages also use a second form of virus detection, called heuristic analysis. This is used to detect infections that have not previously been identified and profiled in the virus database. The analysis often uses virtualisation to place the file being scanned in a controlled environment. This is necessary because most programs are compiled machine code, which cannot effectively be converted back into source code (given the source code, it would be much easier to determine whether the program was malicious). The anti-virus software executes the program in isolation and monitors its activity in relation to the rest of the system. For example, if the file attempts to overwrite or remove important system files, the executable will likely be marked as an infection and sent to the maintainers of the anti-virus package for further analysis.
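Once a sample has been run in isolation, the behaviour that was recorded has to be turned into a verdict. One simple way to do this, sketched below in Python, is to give each observed action a suspicion score and flag the file if the total reaches a threshold. The action names, weights and threshold are all invented for illustration; commercial products use their own, far more detailed rules.

```python
# Hypothetical weights: how suspicious each behaviour observed in the sandbox is.
SUSPICION_WEIGHTS = {
    "overwrite_system_file": 50,
    "delete_system_file": 50,
    "modify_startup_entries": 30,
    "change_browser_homepage": 20,
    "open_network_connection": 5,
    "read_user_document": 2,
}


def heuristic_verdict(observed_actions: list[str], threshold: int = 50) -> tuple[int, bool]:
    """Score a sandboxed run; flag it as malicious if the score reaches the threshold.

    Actions not in the table score zero, so unfamiliar but harmless behaviour
    does not count against the program.
    """
    score = sum(SUSPICION_WEIGHTS.get(action, 0) for action in observed_actions)
    return score, score >= threshold


if __name__ == "__main__":
    print(heuristic_verdict(["read_user_document", "open_network_connection"]))
    print(heuristic_verdict(["change_browser_homepage", "modify_startup_entries"]))
```

The threshold is a trade-off: set it too low and legitimate installers are flagged as false positives; set it too high and new malware slips through.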

Anti-virus software also improves the performance of the system it is running on in a number of ways. The removal of viruses is not always as simple as deleting the offending files. In the case of some modern infections, browser settings may need to be reverted to their former state, as default search engines, home pages and new tab pages are often changed as part of phishing or advertising scams. Even once a virus has been removed, these settings can remain in effect, not only irritating the user but also exposing them to identity theft and more severe infections.

Once viruses have been removed, there is simply less software running at any one time. With fewer processes running, CPU cycles and space in RAM are not consumed unnecessarily, meaning the computer does not need to resort to slower swap space, for example, to maintain full functionality. Although anti-virus packages do reduce performance, the impact is negligible compared with the long-term performance benefit of the protection they offer. Malware can also cause more permanent damage to the system once executed, meaning certain functionality may become broken. When the operating system must troubleshoot errors and fails to complete tasks that rely on broken components, system performance suffers. This can be partially fixed by many anti-virus packages, but may also require a Windows installation disc to repair. Once repaired, the system should resume optimum performance.

In my opinion, traditional anti-virus software is mostly out of date. Using a combination of CCleaner and Malwarebytes, for example, and running them both on a monthly basis will keep a Windows installation clean and fast. Disk defragmentation can also be used to improve performance. I think many anti-virus software companies manage to stay in business because of the ongoing belief that third-party software is required in order to keep a system safe, as was the case before Windows 8.

Linux and OS X, as well as most mobile operating systems, are in practice largely free of viruses. Their design makes gaining superuser or administrator privileges a lot harder than on Windows. Software is often obtained from curated repositories or app stores run by the developers of the operating system or distribution, such as Google or Apple. This makes it much harder to install malicious software unknowingly, and by convention users are discouraged from downloading software from elsewhere on the internet.

Network Firewall

Traffic moves between computers on a network by means of IP, the Internet Protocol. There are two major versions in use today, IPv4 and IPv6, and the world is gradually transitioning to IPv6 because IPv4 addresses are running out. Each IP packet carries transport-layer protocol data, such as that of TCP, UDP or DCCP, and these (TCP in particular) in turn carry application-layer protocol data, like that of HTTP, SSH or SMTP. Each of these application-layer protocols, of which there are thousands, is normally associated with a port number. Port numbers are 16-bit values, so a computer supports 65,535 usable ports for each transport-layer protocol, and TCP or UDP data must enter and leave a computer via one of these ports.
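The 65,535 figure follows from the header format: TCP and UDP both store the source and destination port numbers in 16-bit fields, so usable ports run from 1 to 65,535 (port 0 is reserved). As a small illustration, the sketch below packs the four 16-bit fields of a UDP header (source port, destination port, length, checksum) using Python's `struct` module; the checksum is left at zero for simplicity, and the port numbers are arbitrary examples.

```python
import struct

MAX_PORT = 2**16 - 1  # ports are 16-bit unsigned integers: 65,535


def udp_header(src_port, dst_port, payload_len):
    """Pack an 8-byte UDP header in network byte order (checksum left as 0)."""
    for port in (src_port, dst_port):
        if not 1 <= port <= MAX_PORT:
            raise ValueError(f"port {port} does not fit in a 16-bit field")
    length = 8 + payload_len  # header (8 bytes) plus payload
    return struct.pack("!HHHH", src_port, dst_port, length, 0)


header = udp_header(50000, 53, payload_len=32)  # e.g. a query to port 53 (DNS)
print(len(header), MAX_PORT)  # 8 65535
```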

An illustration showing the location of a network's firewall; between the local area network and wide area network.


A firewall allows traffic to be filtered according to the port it uses, or according to the contents of the packets themselves, but the latter requires DPI (deep packet inspection) and therefore raises privacy concerns. DPI is also not realistically feasible for encrypted traffic: legitimate traffic must be allowed through quickly so as not to delay users, whereas decrypting properly encrypted data without the keys is impractical. Some system administrators therefore choose to block encrypted traffic, except on the HTTPS and mail ports. Firewalls are often used to filter internet connections in institutions and companies, preventing students and employees from accessing content unrelated to their work. A firewall installed on the computers used by students and employees also often prevents programs from listening on a port for incoming connections, so that users cannot host any kind of server that could be accessed from elsewhere on the network.
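Port-based filtering can be sketched as an ordered list of rules checked from top to bottom, where the first matching rule decides what happens to a packet and anything unmatched falls through to a default policy. The rule set below is a hypothetical example of the "HTTPS and mail only" policy described above; real firewalls such as Windows Firewall express the same idea in their own configuration formats.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    action: str          # "allow" or "deny"
    protocol: str        # "tcp" or "udp"
    port: Optional[int]  # None matches any port


# Hypothetical outbound policy: HTTPS and common mail ports only.
RULES = [
    Rule("allow", "tcp", 443),  # HTTPS
    Rule("allow", "tcp", 587),  # mail submission (SMTP)
    Rule("allow", "tcp", 993),  # IMAP over TLS
]
DEFAULT_ACTION = "deny"


def decide(protocol, port):
    """Return the action of the first matching rule, or the default policy."""
    for rule in RULES:
        if rule.protocol == protocol and rule.port in (None, port):
            return rule.action
    return DEFAULT_ACTION


print(decide("tcp", 443))   # allow
print(decide("tcp", 6667))  # deny
```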

Firewalls in businesses and on large networks often consist of separate physical servers on a rack, dedicated to managing internet traffic, but individual clients often include their own software to perform the functions of a dedicated firewall too. Windows includes Windows Firewall, which offers protection on public networks by blocking unsolicited incoming connections, including some denial-of-service attempts, however unlikely these may be. Firewalls on large networks protect the clients and act as a gateway between the intranet and the internet. Public-facing services, such as an educational institution's virtual learning platform, are often placed on servers in a DMZ (demilitarised zone), a separate part of the network outside the firewall's main protection. This makes them more exposed to attack, but means that if an attacker compromises them, the servers holding important and confidential information should remain unaffected.

Minor changes to firewall settings are sometimes required when using third-party software that communicates on a non-standard port. By default, most incoming ports are closed, as every open port is a potential point of attack. When hosting a server, particularly for gaming, one must add a firewall rule to open a specific port so that connections can reach the server software listening on it. To host a web server, for example, ports 80 and 443 must be opened to allow HTTP and HTTPS connections respectively, while a Minecraft server will likely need port 25565. Servers can often be configured to listen on different ports, allowing multiple servers to run on a single machine, and moving a service away from its standard port can add a small extra layer of security against automated attacks. However, attackers can use port scanning software to connect to each port on a computer and check the response, which can reveal the ports in use even when services run on non-standard ports.
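A port scanner's basic check is simple: try to open a TCP connection to a port and see whether it is accepted. The sketch below uses Python's standard `socket` module. To stay self-contained, it starts its own listener on the loopback interface, on a port chosen by the operating system, to stand in for a game or web server, rather than probing another machine.

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port is accepted."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising an exception
        return sock.connect_ex((host, port)) == 0


# Stand-in server: listen on a port picked by the operating system (port 0).
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen()
port = server.getsockname()[1]

print(is_port_open("127.0.0.1", port))  # True while the server is listening
server.close()
```

Binding to a specific number instead of 0 is how a server is configured to listen on a chosen port, which is what allows several servers to share one machine.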

On most home networks, the firewall on each computer is largely unnecessary and more likely to cause inconvenience. This is because of the firewall built into the router. Routers typically block all unrecognised incoming traffic, but rules can usually be configured, for gaming for example, in the router's settings under port forwarding. This allows incoming traffic to be routed to a particular computer, and often for it to be translated to another port or range of ports. Since most routers support both TCP and UDP, each with 65,535 ports, a theoretical 131,070 port-forwarding rules could be configured, each sending traffic to a different computer on the network.
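A router's port-forwarding table can be thought of as a lookup from an incoming (protocol, external port) pair to an internal address and port. The sketch below models that lookup with a dictionary; the private IP addresses and mappings are hypothetical examples. Because each protocol has 65,535 usable ports, two protocols give 2 × 65,535 = 131,070 possible keys, which is where the theoretical figure comes from.

```python
# Hypothetical rules: (protocol, external port) -> (internal IP, internal port)
FORWARDING = {
    ("tcp", 25565): ("192.168.1.20", 25565),  # Minecraft server
    ("tcp", 8080): ("192.168.1.30", 80),      # external 8080 translated to 80
}

PORTS_PER_PROTOCOL = 65_535
MAX_RULES = 2 * PORTS_PER_PROTOCOL  # TCP + UDP = 131,070 distinct keys


def route_incoming(protocol, external_port):
    """Return the internal destination, or None if the router should block it."""
    return FORWARDING.get((protocol, external_port))


print(route_incoming("tcp", 8080))  # ('192.168.1.30', 80)
print(route_incoming("tcp", 22))    # None: unrecognised traffic is blocked
print(MAX_RULES)                    # 131070
```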

Firewalls are intended to mitigate bulk and targeted attacks, as opposed to virus infections. For instance, when running SSH on a public server on the default port 22, one is likely to notice a large amount of traffic from around the world attempting to log in with common usernames. Services are therefore often run under dedicated, unprivileged accounts; web servers, for example, are often run as the www-data or http user on Linux, which improves security in the event of a password breach. By using a firewall, traffic bound for particular ports can be rejected or dropped, so that no access can be gained at all. This is often used to prevent external access to services running on a machine that should only be accessed locally, such as a database. Firewalls can also block traffic from particular hosts after a certain number of failed authentication attempts, which would otherwise continue to consume system resources and slow down the system.
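Blocking a host after repeated failed logins (the approach taken by tools such as fail2ban) can be sketched as a count of failures per source address within a time window. The threshold, window length and example addresses below are hypothetical; a real tool would also add a firewall rule for the banned address rather than just recording it in memory.

```python
import time
from collections import defaultdict, deque

MAX_FAILURES = 5      # hypothetical: failures allowed...
WINDOW_SECONDS = 600  # ...within this many seconds


class LoginGuard:
    def __init__(self):
        self.failures = defaultdict(deque)  # address -> failure timestamps
        self.banned = set()

    def record_failure(self, address, now=None):
        """Record a failed login; ban the address once it reaches the limit."""
        now = time.monotonic() if now is None else now
        attempts = self.failures[address]
        attempts.append(now)
        # Forget failures that fall outside the time window.
        while attempts and now - attempts[0] > WINDOW_SECONDS:
            attempts.popleft()
        if len(attempts) >= MAX_FAILURES:
            self.banned.add(address)

    def is_allowed(self, address):
        return address not in self.banned


guard = LoginGuard()
for second in range(5):
    guard.record_failure("203.0.113.7", now=second)
print(guard.is_allowed("203.0.113.7"))  # False after five rapid failures
```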

The main way in which firewalls improve the performance and reliability of a server is by reducing the effectiveness of (D)DoS, or (distributed) denial-of-service, attacks. Individual IP addresses or whole ranges can be blocked, so that any connections from hosts using those addresses are refused under all circumstances. If managed well, a firewall can be very effective, helping a server stay online rather than becoming overwhelmed with traffic.
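Blocking individual addresses or whole ranges can be expressed with Python's standard `ipaddress` module, which understands CIDR notation. The blocked ranges below are reserved documentation addresses used purely as placeholders.

```python
import ipaddress

# Placeholder blocklist: a whole documentation /24 range plus one single host.
BLOCKED = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.42/32"),
]


def is_blocked(address):
    """Return True if the address falls inside any blocked network."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in BLOCKED)


print(is_blocked("203.0.113.99"))   # True: inside the blocked /24
print(is_blocked("198.51.100.43"))  # False: only .42 is blocked
```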

Disc Defragmenter

Fragmentation

Storage devices in computers and mobile devices must be formatted with a particular type of filesystem. Windows uses the NTFS (New Technology File System) format, developed by Microsoft. Linux, in contrast, typically uses ext4, and removable storage devices are often formatted as FAT32. Windows suffers most from fragmentation because of the way in which it places files on disc.

When a new file is created on an empty filesystem, it is typically placed at the beginning of the storage space. In Windows, the next file created on the drive is placed immediately after the previous one, with no space left between the two. This method is perfectly efficient for a read-only or otherwise unchanging filesystem, but problems start to arise as files are deleted, resized and created. If the first file on the drive needs to expand, Windows adds the new data after the second file, since moving the second file out of the way would be time-consuming. It then updates the file's record in the MFT (Master File Table) to show that the first part of the file is at the beginning of the drive and the rest is after the second file. If the second file is later deleted, the only file left on the disc is still stored in two separate pieces, which reduces disc read and write speeds and overall performance; the file is described as fragmented. After only a few months of use, the main storage drive can become heavily fragmented, hurting the performance of the computer.
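The scenario above can be reproduced with a toy model of a disc as a row of blocks, where each new block is written to the first free position. This is a simplified stand-in for the placement behaviour described, not NTFS's actual allocator. Growing file A after file B has been written leaves A in two separate runs of blocks, and deleting B leaves A fragmented with a gap in between.

```python
def allocate(disk, name, blocks):
    """Write `blocks` blocks of file `name` into the first free positions."""
    for _ in range(blocks):
        disk[disk.index(None)] = name  # raises ValueError if the disc is full


def delete(disk, name):
    """Free every block belonging to `name`."""
    for i, block in enumerate(disk):
        if block == name:
            disk[i] = None


def fragments(disk, name):
    """Count the separate contiguous runs of blocks belonging to `name`."""
    runs, previous = 0, None
    for block in disk:
        if block == name and previous != name:
            runs += 1
        previous = block
    return runs


disk = [None] * 10
allocate(disk, "A", 3)  # A A A . . . . . . .
allocate(disk, "B", 3)  # A A A B B B . . . .
allocate(disk, "A", 2)  # A grows: A A A B B B A A . .
delete(disk, "B")       # A A A . . . A A . .
print(fragments(disk, "A"))  # 2
```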

Disc defragmentation is a performance improvement for hard disc drives only. Defragmenting a solid-state drive can even be harmful, because an SSD can only withstand a limited number of write (program/erase) cycles on each block over its lifetime, and defragmentation performs a large number of writes. Complex algorithms running on an SSD's controller chip deliberately spread data across the different NAND flash chips to even out this wear (a technique known as wear levelling) and so preserve the life of the drive. Because an SSD has no moving parts, files being fragmented in this way has virtually no effect on performance.
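The idea behind wear levelling can be illustrated with one simple rule: when data must be written, place it in the free block that has been erased the fewest times, so that no single block wears out early. This is a deliberately simplified model; real SSD controllers use far more elaborate, proprietary schemes, and the block count here is arbitrary.

```python
class WearLevellingModel:
    """Toy model: each write goes to the least-erased free block."""

    def __init__(self, num_blocks):
        self.erase_counts = [0] * num_blocks
        self.free = set(range(num_blocks))

    def write(self):
        """Pick the least-worn free block (lowest index on ties) and mark it used."""
        block = min(self.free, key=lambda b: (self.erase_counts[b], b))
        self.free.remove(block)
        return block

    def erase(self, block):
        """Erasing a block frees it again and adds one cycle of wear."""
        self.erase_counts[block] += 1
        self.free.add(block)


ssd = WearLevellingModel(4)
for _ in range(100):
    block = ssd.write()
    ssd.erase(block)  # repeatedly rewriting "the same" data
print(ssd.erase_counts)  # [25, 25, 25, 25]: wear is spread evenly
```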

Disc defragmentation speeds up a computer system by improving the rate at which files on a hard drive can be read and written. As stated, this only applies to hard disc drives, not to the more modern SSD alternative. This is because hard drives have moving arms that must travel to the part of a spinning platter where particular data is to be read or written. SSDs are chip-based, so accessing data on one part of the device takes no longer than accessing data on another. Once a drive has been defragmented, the read/write head needs to move very little, even when handling very large files. This eliminates the delays caused by the head having to jump to a distant part of the disc partway through reading or writing a file. Although each head movement takes only a few milliseconds, on a badly fragmented drive these delays add up. Once a filesystem is defragmented, a noticeable performance boost can be felt in boot and file saving times.
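A rough back-of-the-envelope model shows why the delays add up: every extra fragment costs roughly one extra head movement (seek) before data can be transferred. The seek time and transfer rate below are assumed, typical-order figures for a desktop hard disc rather than measurements, and the model ignores caching and other drive details.

```python
SEEK_MS = 10.0             # assumed average time to reposition the head
TRANSFER_MB_PER_S = 100.0  # assumed sequential transfer rate


def read_time_ms(file_mb, fragment_count):
    """Estimate read time: one seek per fragment plus sequential transfer time."""
    seek = fragment_count * SEEK_MS
    transfer = file_mb / TRANSFER_MB_PER_S * 1000
    return seek + transfer


print(read_time_ms(50, 1))    # 510.0  (contiguous 50 MB file)
print(read_time_ms(50, 500))  # 5500.0 (same file in 500 fragments)
```

Under these assumptions, the same 50 MB file takes roughly ten times longer to read when badly fragmented, almost all of it spent moving the head.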

On Linux systems, the ext2, ext3 and ext4 filesystems reduce fragmentation by spreading files across the disc rather than packing them tightly together. The first file added to the drive sits at the beginning of the storage space, but the system leaves a gap between the end of the first file and the second file added to the volume. This means that if the first file needs to expand, it can do so without another file getting in its way. Because files rarely become badly fragmented until the drive is around 80% full, a Linux system can maintain high performance for years after the OS was installed. Some users find that Windows must be reinstalled every six months to fully clear up malware, fragmentation, registry and bloatware issues and keep the system performing at its best, but this is almost unheard of on Linux.
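The gap-leaving strategy can be illustrated with a toy model in which each new file starts a fixed number of free blocks after the end of the previous one, so a file that grows can extend into its own slack. This is a simplified illustration of the idea described above only; ext4's real allocator is considerably more sophisticated, and the gap size here is a hypothetical value.

```python
GAP = 4  # hypothetical number of free blocks left after each file


class GappedDisk:
    def __init__(self, size):
        self.blocks = [None] * size
        self.next_start = 0

    def create(self, name, length):
        """Place a new file, then leave GAP free blocks after it."""
        start = self.next_start
        for i in range(start, start + length):
            self.blocks[i] = name
        self.next_start = start + length + GAP

    def grow(self, name, extra):
        """Extend a file into the free blocks immediately after its last block."""
        end = max(i for i, b in enumerate(self.blocks) if b == name) + 1
        for i in range(end, end + extra):
            if self.blocks[i] is not None:
                raise RuntimeError("no room to grow contiguously")
            self.blocks[i] = name


disk = GappedDisk(16)
disk.create("A", 3)
disk.create("B", 3)  # A A A . . . . B B B . . . . . .
disk.grow("A", 2)    # A A A A A . . B B B . . . . . .
```

Unlike the tightly packed layout, file A stays in one contiguous run after growing, as long as its growth fits within the gap.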

Defragmentation Utilities

An animated GIF showing the process of defragmentation on a disc. The squares represent blocks on a data storage device.


Windows includes a Disk Defragmenter, which allows the user to analyse the fragmentation of a particular partition, as well as run the defragmentation itself. Some users prefer third-party software, such as Defraggler from Piriform, the creators of CCleaner. Both programs do much the same thing, as described below.

To begin with, the software will usually assess how fragmented the drive is, and then begin to move files. To defragment the drive efficiently and safely, there must still be free space on it; otherwise a large amount of reliable RAM would be needed to hold data temporarily. The program finds the first file known to be fragmented and moves whatever is obstructing it from growing into free space at the end of the disc. It then locates the remaining fragments of the first file and moves them into place after the first part, defragmenting it. As it does this, it continually updates the record of files on the disc (called the MFT on an NTFS partition). This means that very little data will be lost to corruption if power to the system is lost or the application crashes before the process completes and exits cleanly. Once the first file is stored in a single section of the disc, the defragmenter moves the first fragment of the next file into the space after the first file. As before, it temporarily moves any obstructing fragments elsewhere, and later returns them to a single section of drive space.
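The core of this procedure can be sketched on a toy disc represented as a list of blocks: for each file in turn, its blocks are moved into the next available position, and whatever occupied that position (another file's block or free space) is moved out of the way. This is a simplified, in-memory illustration that treats a file's blocks as interchangeable; it omits the safety steps described above, such as staging data in free space and updating the file table after every move.

```python
from typing import List, Optional

Disk = List[Optional[str]]  # each entry is a file name, or None for a free block


def defragment(disk: Disk) -> Disk:
    """Return a copy of the disc with each file's blocks made contiguous."""
    disk = list(disk)
    # Process files in the order in which they first appear on the disc.
    order: List[str] = []
    for block in disk:
        if block is not None and block not in order:
            order.append(block)

    pos = 0  # next position to fill
    for name in order:
        for i in range(pos, len(disk)):
            if disk[i] == name:
                # Move the fragment into place; whatever occupied that
                # position is swapped out to where the fragment was.
                disk[pos], disk[i] = disk[i], disk[pos]
                pos += 1
    return disk


before = ["A", "B", "A", None, "C", "B", "A", None]
after = defragment(before)
print(after)  # ['A', 'A', 'A', 'B', 'B', 'C', None, None]
```

At the end, every file occupies one run of consecutive blocks and all the free space has been gathered at the end of the disc.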

At the end of the process, each file is stored in consecutive sectors of the drive. In practice, this means that the head of the hard disc only needs to move to the beginning of a file it must read or write, and can then proceed steadily. On a fragmented filesystem, the read/write head is forced to move rapidly back and forth across the platters, which adds mechanical wear to the drive and reduces data transfer rates.